Spring 2012:LFM Proposal

Abstract

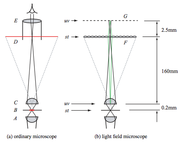

A light field microscope (LFM) can produce a 3-dimensional rendering of a sample using information from a single image. This capability comes from adding a microlens array, a grid of lenses with diameters on the micron scale, to a traditional illumination microscope. Here we propose a low-cost light field fluorescence microscope, with associated code and recommended experiments, for use in an undergraduate teaching laboratory.

Background and Motivation

Traditional light microscopy has been used to examine miniature biological specimens since the mid-1600s. Since then, researchers have come to recognize, and attempt to circumvent, the inherent limitations of the technique. One such limitation is the superposition of features in the captured image. With no depth information from the subject, it is difficult to separate data specific to the focal plane from the rest of the image.

The light field microscope allows angular information to be recorded in a single image. The light field is a function that represents the amount of light traveling in every direction through every point in space. By recording a sample’s light field, one can produce perspective and parallax views of the specimen. This is accomplished by inserting a microlens array into a conventional bright field microscope and analyzing the images in silico. A microlens array is an optical component containing thousands of lenses with diameters on the micron scale. Applying 3D deconvolution to the focal stacks computed from the array images produces a series of cross sections, which allow for a 3D reconstruction of the sample.

Light fields and lens arrays have their roots in photography [Lippmann 1908][1] and have evolved as the technology for manufacturing mini and microlenses improved. Lens arrays have been used to build a microscope with an ultra-wide field of view [Weinstein 2004][2], and have recently been used in a commercial light field camera [Lytro.com, Ng et al. 2005][3]. The first LFM built to capture multi-viewpoint imagery was described in 2006 [Levoy et al. 2006][4], along with open source software to assist in the deconvolution, image stacking, and 3D volume rendering process. The setup was later improved with a second microlens array for angular control over illumination [Levoy et al. 2009][5].

Confocal microscopy also provides a method of visualizing 3-dimensional images and has better spatial resolution than light field microscopy, but it requires scanning the entire sample at every depth of interest. Light field microscopy has the advantage of capturing relative depth information in a single photo. This additional information comes at a cost, however. Diffraction places an upper limit on the lateral and axial resolution of the images, so we sacrifice spatial resolution to obtain angular resolution. As we try to visualize smaller and smaller features, we run into the practical limitations of numerical aperture (NA), superimposed features, and limited depth of field.

Light propagating from an object through a lens is bandlimited by NA/λ, where λ is the wavelength of the light.

Theory

Optical Elements

Diffraction places an upper limit on the lateral and axial resolution of the images. Because we lose this spatial resolution, we must be careful to set a minimum feature size and field of view for the sample, and to choose a camera sensor whose pixel size and pixel count are optimized for the image. We want the sensor pixel size to be roughly half the diffraction spot size of the objective we choose: pixels that are too small lose sensitivity, and pixels that are too large lose information. The sensor should have as many pixels as possible without driving up the cost of the instrument. The objective lens should be chosen based on the desired field of view. It should have the highest NA that is appropriate for the specimen and an adequate field of view. We plan to use the objectives available in the 20.345 laboratory.
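The pixel-size rule above can be turned into a quick estimate. The following is a minimal sketch, not a design calculation: it assumes the Rayleigh criterion (0.61 λ/NA) for the diffraction spot, assumes the spot is scaled to the image plane by the objective magnification, and uses an illustrative emission wavelength of 525 nm. The function names are hypothetical.

```python
def diffraction_spot_um(wavelength_um, na):
    """Diffraction spot size at the specimen in microns.

    Assumption: Rayleigh criterion, 0.61 * wavelength / NA.
    """
    return 0.61 * wavelength_um / na


def target_pixel_um(wavelength_um, na, magnification):
    """Pixel size equal to half the diffraction spot, referred to the image plane.

    Assumption: the spot at the image plane is the specimen-side spot
    multiplied by the objective magnification.
    """
    spot_at_image_plane = diffraction_spot_um(wavelength_um, na) * magnification
    return spot_at_image_plane / 2.0


# Illustrative values only: 40X/1.3NA objective, 525 nm light (assumed).
pixel = target_pixel_um(wavelength_um=0.525, na=1.3, magnification=40)
print(f"target pixel size: {pixel:.2f} um")
```

With these assumed values the target pixel size comes out near 4.9 µm; a different objective or wavelength shifts the result proportionally.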

The microlens array has two major parameters: the pitch and the focal length. The pitch should be roughly equal to or smaller than the feature size multiplied by the magnification of the objective. A larger pitch would give better angular resolution and allow for a more distinct focal plane in refocusing. Each microlens conveys an image of the back focal plane to the camera, so the focal length of the lenses affects the size of the images captured on the camera. Too short a focal length yields small circles, and even more resolution is lost on the camera pixels. Too long a focal length yields large circles, but the projected images may overlap, and the information will no longer be useful in postprocessing.

In A Practical Introduction to Light Field Microscopy [Zhang 2010][7], a 40X/1.3NA oil objective was combined with a 100 micron pitch microlens array with a focal length of ~1500 microns to image 5 micron neurons.
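The trade-off between circles that are too small and circles that overlap can be checked numerically. The sketch below assumes the common f-number matching rule, in which the microlens f-number (focal length divided by pitch) is set equal to the image-side f-number of the objective, M/(2·NA). The function names are hypothetical; the numbers are the ones quoted from [Zhang 2010][7].

```python
def image_side_f_number(magnification, na):
    """Image-side f-number of the objective, M / (2 * NA)."""
    return magnification / (2.0 * na)


def matched_focal_length_um(pitch_um, magnification, na):
    """Microlens focal length whose f-number matches the objective."""
    return pitch_um * image_side_f_number(magnification, na)


def subimage_diameter_um(focal_length_um, magnification, na):
    """Diameter of the circle each microlens projects onto the sensor."""
    return focal_length_um / image_side_f_number(magnification, na)


# Values quoted in the text: 40X/1.3NA objective, 100 um pitch, ~1500 um focal length.
f_matched = matched_focal_length_um(100.0, 40, 1.3)
diameter = subimage_diameter_um(1500.0, 40, 1.3)
print(f"matched focal length: {f_matched:.0f} um")
print(f"sub-image diameter at f = 1500 um: {diameter:.1f} um")
```

Under this assumption the matched focal length is about 1540 µm, and a 1500 µm focal length projects circles about 97.5 µm across, just under the 100 µm pitch, so neighboring sub-images nearly fill the sensor without overlapping.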

Software Elements

The captured light field is a four-dimensional function with two spatial dimensions and two angular dimensions. A synthetic aperture can be created for this field, projecting from 4-space to 2-space, by taking slices of the 4D Fourier transform of the field [Ng 2005]. The slice is defined by

$ P_\alpha[G](k_x, k_y) = \frac{1}{F^2} G(\alpha k_x, \alpha k_y, (1-\alpha) k_x, (1-\alpha) k_y) $

where $P_\alpha$ is the chosen plane in 4D Fourier space, $G$ is the 4D Fourier transform of the light field, $F$ is the film plane depth, $\alpha$ is a characterization of the focusing of the camera, and $k_x$ and $k_y$ are dimensions in frequency space. By choosing $\alpha$ and $F$ and taking the 2D inverse Fourier transform of $P_\alpha$, an image is rendered from the light field. This method can be used to create arbitrary synthetic apertures by choosing planes in the Fourier space model of the light field.
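Synthetic refocusing can also be illustrated in the spatial domain. The sketch below is not the Fourier slice method described above; it swaps in the simpler shift-and-add approach, in which each angular view is shifted in proportion to its offset from the central view and the views are averaged. The array layout `[U, V, S, T]`, the `slope` parameter (standing in for the focus setting), and both function names are assumptions made for illustration.

```python
import numpy as np


def refocus_shift_and_add(light_field, slope):
    """Refocus a 4D light field by shifting each angular view and averaging.

    light_field: array of shape [U, V, S, T] (two angular, two spatial axes).
    slope: pixels of shift per unit angular offset from the central view;
           a hypothetical parameter that selects the synthetic focal plane.
    """
    n_u, n_v, n_s, n_t = light_field.shape
    u0, v0 = (n_u - 1) / 2.0, (n_v - 1) / 2.0
    image = np.zeros((n_s, n_t), dtype=float)
    for u in range(n_u):
        for v in range(n_v):
            ds = int(round(slope * (u - u0)))
            dt = int(round(slope * (v - v0)))
            # np.roll wraps around at the borders; acceptable for a sketch only.
            image += np.roll(light_field[u, v], shift=(ds, dt), axis=(0, 1))
    return image / (n_u * n_v)


def make_point_light_field(n_views=3, size=9, disparity=1):
    """Synthetic test data: one point that moves `disparity` pixels per view."""
    lf = np.zeros((n_views, n_views, size, size))
    center, mid = size // 2, (n_views - 1) // 2
    for u in range(n_views):
        for v in range(n_views):
            lf[u, v, center + disparity * (u - mid), center + disparity * (v - mid)] = 1.0
    return lf


lf = make_point_light_field()
in_focus = refocus_shift_and_add(lf, slope=-1.0)  # views realigned on the point
defocused = refocus_shift_and_add(lf, slope=0.0)  # point spread over 3x3 pixels
```

In the synthetic example, a slope of -1 cancels the one-pixel-per-view disparity and concentrates the point into a single pixel, while a slope of 0 leaves it spread over a 3×3 patch. Integer shifts are used for brevity; real data would need sub-pixel interpolation.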

Experimental Goal

The LFM resulting from this project should be fairly versatile and able to image most typical subjects. Experimentally, we want to test the limits of imaging with our LFM. Across different microscope configurations and different subjects, the main axes of variation are depth resolution, spatial resolution, and performance with thick media.

Optical Performance Calibration

To measure light field resolution, we will use an Air Force target and a Ronchi ruling slide. We will bring the bars into focus and then lower the stage in increments, taking a light field image at each stop. The point at which synthetic refocusing in post-processing fails will determine the far side of our depth of field.
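One way to decide when refocusing "fails" is to track the contrast of the ruling in each refocused image. The sketch below assumes Michelson contrast measured on an intensity profile taken across the bars, and an arbitrary failure threshold of 0.1; the proposal does not fix either choice, and the function names are hypothetical.

```python
import numpy as np


def michelson_contrast(profile):
    """Michelson contrast (Imax - Imin) / (Imax + Imin) of an intensity profile."""
    p = np.asarray(profile, dtype=float)
    i_max, i_min = p.max(), p.min()
    if i_max + i_min == 0:
        return 0.0
    return (i_max - i_min) / (i_max + i_min)


def last_resolved_step(profiles, threshold=0.1):
    """Index of the last stage step whose contrast stays at or above threshold.

    profiles: one intensity profile per stage step, ordered from the in-focus
              position outward. threshold is an assumed cutoff, not a standard.
    Returns -1 if even the first step falls below the threshold.
    """
    last = -1
    for index, profile in enumerate(profiles):
        if michelson_contrast(profile) < threshold:
            break
        last = index
    return last


# Made-up profiles for three stage steps, with contrast dropping at each step.
steps = [
    [0.0, 1.0, 0.0, 1.0],
    [0.25, 0.75, 0.25, 0.75],
    [0.48, 0.52, 0.48, 0.52],
]
print(last_resolved_step(steps))
```

Multiplying the returned step index by the stage increment gives an estimate of the far side of the depth of field under the chosen threshold.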

3D Reconstruction

In order to generate a three-dimensional mapping near the primary focal plane, we will algorithmically separate rays into planes of origin, creating an image stack. This stack effectively contains a depth mapping for the subject. The system of selecting rays is similar to a multiplexed confocal setup, looking at a stack of planes instead of a single plane.
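Given an image stack of this kind, a coarse depth mapping can be sketched with a depth-from-focus rule: for each pixel, pick the plane in which the image is locally sharpest. The code below is an illustration under assumptions, not the reconstruction method of the proposal; it uses the squared discrete Laplacian as the sharpness measure, and the function names are hypothetical.

```python
import numpy as np


def laplacian_energy(image):
    """Per-pixel sharpness: squared 4-neighbor discrete Laplacian.

    np.roll wraps at the borders, so edge pixels are only approximate.
    """
    img = np.asarray(image, dtype=float)
    lap = (
        np.roll(img, 1, axis=0) + np.roll(img, -1, axis=0)
        + np.roll(img, 1, axis=1) + np.roll(img, -1, axis=1)
        - 4.0 * img
    )
    return lap ** 2


def depth_from_focus(stack):
    """For each pixel, return the index of the sharpest plane in the stack.

    stack: array of shape [planes, rows, cols].
    """
    energy = np.stack([laplacian_energy(plane) for plane in stack])
    return np.argmax(energy, axis=0)


# Synthetic stack: only the middle plane contains fine detail.
checker = (np.indices((8, 8)).sum(axis=0) % 2).astype(float)
stack = np.stack([np.zeros((8, 8)), checker, np.full((8, 8), 0.5)])
depth_map = depth_from_focus(stack)
```

In the synthetic stack every pixel is assigned to plane 1, the only plane with detail. On real data the sharpness measure would normally be smoothed over a neighborhood before taking the maximum, since single-pixel estimates are noisy.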

Development Plan

Comprehensive research already exists on both light field sensing/processing and confocal microscope design. We plan to integrate the two, so the main development challenges lie in building effective individual parts and interfacing them. To make testing of the sensor and the microscope apparatus more constrained, the sensor will be built and tested in a simple environment before being used in a confocal-like apparatus. Testing will consist of capturing ray bundles from different samples and evaluating the results. Development will be complete when the microscope can readily produce confocal-like images.

Once built, the microscope will have a certain operating depth range, along with other restrictions on its ability to capture ray bundles and output images. We plan to first measure these parameters by imaging a sample of silica microbeads suspended in a clear gel. This allows for a fairly low-complication capture and will hopefully expose any quirks of the setup. We also want to determine the microscope’s maximum working depth in materials of varying opacity. In a fully transparent material, we expect the maximum working depth to be the entire depth of the material. With increasing opacity, we expect the working depth to decrease. At maximum opacity, the expected maximum working depth would be at the surface of the sample. The maximum depth will be measured by capturing data from gel samples of varying opacity.

This project is restricted to nine weeks of work and experimentation. We have set aside three weeks for experimentation, with the six preceding weeks allocated to constructing the apparatus and developing a method of processing its data.

Weekly Goals

By the end of Week 1, we intend to have gathered the necessary equipment and materials, especially the microlens array and camera. We also intend to start assembly of a very simple brightfield transmittance microscope which will be used to test our processing of the light field.

By the end of Week 2, the simple microscope and the light field imager (microlens array and camera) will be completely built and transmitting. This is an important milestone, since it lets us concentrate in the following weeks on getting the imager to work with the software.

In Weeks 3-4, we plan to focus solely on transforming the ray bundle from the light field imager into a coherent and sensible image stack. We intend to be able to choose arbitrary synthetic apertures for any image and to focus on any arbitrary plane within the light field’s capacity. At the end of Week 4, the light field setup should function to the full specifications of the final microscope.

By the end of Week 5, we want to have built most of the final confocal-type microscope. By this time, all light paths should be set up for the microscope, and the apparatus should only be missing glass optics and the camera.

By the end of Week 6, we plan to finish the confocal-type light field microscope by installing all lenses, mirrors, and objectives, as well as the light field imager. We intend to test and tweak the software to work with the new apparatus, though in theory this should be a small change.

Weeks 7-9 will be devoted to experimentation with the system.

Cost

There are two major investments in this project: a microlens array and an appropriately specified camera. The sort of microlens array required is available from a variety of retailers, including ThorLabs. We are interested in an array of ~150µm-diameter lenses, reasonable pitch, and a displacement angle of at least ±5°. We are trying to maximize the number of rays captured by the camera (rays captured is proportional to the number of microlenses on the sensor) and to allow for a good amount of spatial resolution for every ray (proportional to the number of pixels under a single lenslet). This process sacrifices sensor spatial resolution for resolution in the depth axis. Because of this, we require a camera with ~7µm pixels and a resolution of over 5 megapixels. The spatial resolution of the output of the light field processing will vary with the depth chosen, but with a 5 megapixel sensor, the minimum output resolution will be around 0.3 megapixels. The camera and microlens array are the only pieces of equipment that need to come from outside sources; the rest of the materials will be available from lab supplies. These consist of optics supplies for building microscopes, both a brightfield transmittance microscope and a confocal-like microscope.

References

- LIPPMANN, G. 1908. Epreuves réversibles donnant la sensation du relief. J. Phys. 7 (4), 821–825.

- WEINSTEIN, R.S., DESCOUR, M.R., et al. 2004. An array microscope for ultrarapid virtual slide processing and telepathology. Design, fabrication, and validation study. Human Pathology 35, 11, 1303-1314.

- NG, R., LEVOY, M., BREDIF, M., DUVAL, G., HOROWITZ, M., HANRAHAN, P. 2005. Light Field Photography with a Hand-Held Plenoptic Camera. Stanford Tech Report CTSR 2005-02.

- LEVOY, M., NG, R., ADAMS, A., FOOTER, M., HOROWITZ, M. 2006. Light Field Microscopy. ACM Transactions on Graphics 25, 3, 924.

- LEVOY, M., ZHANG, Z., MCDOWALL, I. 2009. Recording and controlling the 4D light field in a microscope. Journal of Microscopy 235, 2, 144-162.

- Light field microscopy - Stanford 2006 (pdf)

- ZHANG, Z. 2010. A Practical Introduction to Light Field Microscopy. Web. 10 Mar. 2012.